HiRISE

I wrote this project as part of the "Introduction to Computer Graphics" course at the University of Bonn in summer 2020. The timeframe for the project was six weeks.

It is a short action film set in and around a skyscraper on a Mars landscape. The rendering is done with a scratch-built mini-engine written in C++ and OpenGL.

This project is entirely open source, and you can find the source code on my GitHub. It should run on any Linux machine with OpenGL and irrKlang installed, though I can't guarantee anything.

How it was done

Planning

As this was going to be something of a short movie, the first step was to draw a storyboard and to come up with a rough written overview, which looked something like this:

The protagonist stands up from his desk and looks around - sirens sound, and he runs towards the window.

On his way to the window, he is followed by a group of people and does parkour over tables and other objects.

He breaks through the window, and we see the building from the outside, standing on a science-fiction Mars landscape. The protagonist then jumps across to another building and runs away. The protagonist falls in slow motion while the camera continues to move.

Then it was time to think about by far the biggest chunk of work, the implementation, and about which features the engine should actually have. Here is the list of all the features I came up with back then, with the ones I did not end up implementing crossed out.

Main features

- Minimal Cinematic Engine
- Export files from Blender, import into HiRISE
  - use dae/obj
  - protobuf or json as file format? Custom (floats in file, space-separated)
- Camera position, skeletal animation
  - Save camera positions in file
- Rigging for humanoids
  - has to work well for realistic movements
- Physical simulations for glass shards
  - was simulated in Blender, then imported
- Reflection in glass
  - First, for the entire plane by duplicating the scene
  - Other technique for many shards
    - Reflect environment map
    - Re-render environment map in real time (not needed here for convincing results)
    - e.g. SS (Screen Space) (partially missing scene), ray tracing (too expensive for real time), environment map
- Displacement Mapping + PDS Parser
  - Load height data and color from PDS files
  - Mars surface from HiRISE data
- Code for sound exists but ended up not being used in the final film.

Optional Features

- Dynamic Subdivision Surface of the Mars surface
  - Quality and performance improvement
- Procedural Textures for Mars-surface
  - Color for surfaces without color information from HiRISE
  - Didn't work too well
- HDR-Effects, motion blur, focus blur
  - Increasing quality, if time is left over (priority decreasing)

Implementation

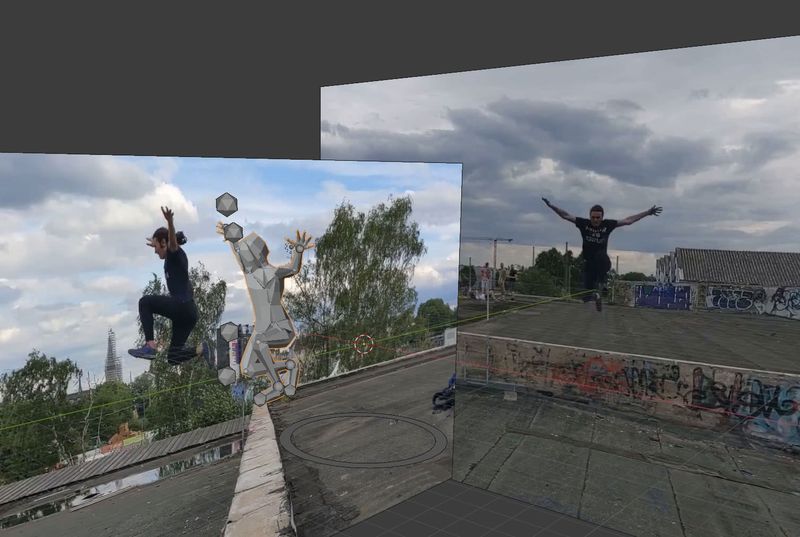

I started with the animation in Blender and used reference videos for more realistic results. These were filmed specifically for HiRISE with a friend of mine who is quite good at parkour. We filmed with two cameras at the same time from two angles: one from the front, and one that captured an exact side view at the most interesting moment of the action.

These videos were used as the background in Blender while animating with inverse kinematics.

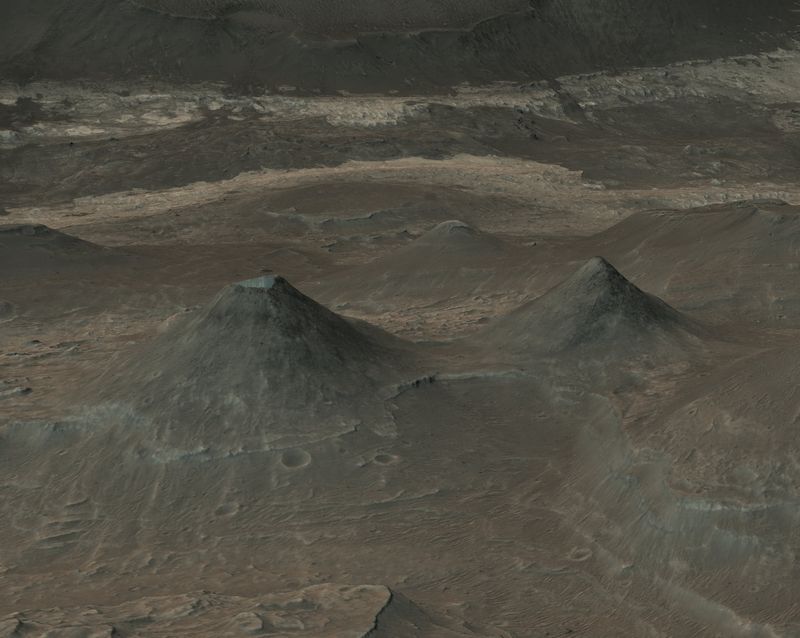

For the surrounding Mars surface, I used height maps and black-and-white images from the High Resolution Imaging Science Experiment (HiRISE), which is also where the project's name comes from.

The exact dataset used can be found at https://www.uahirise.org/dtm/dtm.php?ID=ESP_048136_1725. The highest-resolution images I used are stored in the PDS (Planetary Data System) format, for which I wrote a decoder. Luckily, this format is not too difficult to handle if you just want to get at the raw data.
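The decoder itself is not shown here. To give a rough idea of what "getting at the raw data" involves, here is a minimal sketch built on several assumptions:

- the file is a PDS3 file with an attached ASCII label;
- the `^IMAGE` pointer is a plain 1-based record number;
- the samples are 32-bit little-endian floats (`SAMPLE_TYPE = PC_REAL`).

The function names are hypothetical, and the label keywords should be checked against the actual files.

```cpp
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <map>
#include <sstream>
#include <string>
#include <vector>

// Removes leading and trailing spaces and tabs.
static std::string trim(const std::string& s) {
    const auto b = s.find_first_not_of(" \t");
    if (b == std::string::npos) return "";
    const auto e = s.find_last_not_of(" \t");
    return s.substr(b, e - b + 1);
}

// Collects "KEY = VALUE" pairs from a PDS3 label until the END line.
// Keys inside OBJECT ... END_OBJECT blocks are flattened, and multi-line
// values (e.g. long quoted descriptions) are not handled.
std::map<std::string, std::string> parsePdsLabel(const std::string& label) {
    std::map<std::string, std::string> kv;
    std::istringstream in(label);
    std::string line;
    while (std::getline(in, line)) {
        if (!line.empty() && line.back() == '\r') line.pop_back(); // CRLF line endings
        const std::string t = trim(line);
        if (t == "END") break;
        const auto eq = t.find('=');
        if (eq == std::string::npos) continue;
        kv[trim(t.substr(0, eq))] = trim(t.substr(eq + 1));
    }
    return kv;
}

// Byte offset of the image data, assuming ^IMAGE holds a plain 1-based record number.
std::size_t imageOffset(const std::map<std::string, std::string>& kv) {
    const std::size_t recordBytes = std::stoul(kv.at("RECORD_BYTES"));
    const std::size_t record = std::stoul(kv.at("^IMAGE"));
    return (record - 1) * recordBytes;
}

// Decodes little-endian IEEE 32-bit floats (SAMPLE_TYPE = PC_REAL, SAMPLE_BITS = 32),
// independent of the host's byte order.
std::vector<float> decodePcReal(const std::vector<unsigned char>& bytes,
                                std::size_t offset, std::size_t count) {
    std::vector<float> out(count);
    for (std::size_t i = 0; i < count; ++i) {
        std::uint32_t bits = 0;
        for (int b = 3; b >= 0; --b)
            bits = (bits << 8) | bytes.at(offset + 4 * i + static_cast<std::size_t>(b));
        std::memcpy(&out[i], &bits, sizeof bits);
    }
    return out;
}
```

Assuming the image has no line prefix or suffix bytes, the height grid then starts at `imageOffset(kv)` bytes into the file. It holds `LINES * LINE_SAMPLES` samples.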

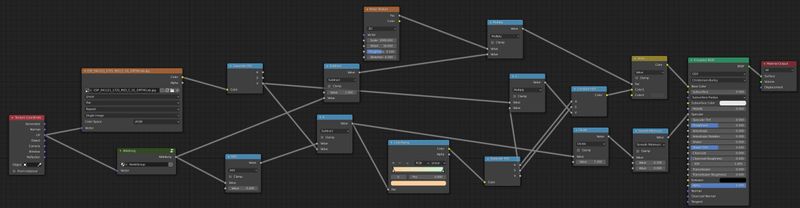

To combine the height map and the black-and-white image into a colored 3D model, I built a node network in Blender that generates the color for each position. I used a color reference image from a different part of Mars for this.

In Blender, parameters and functions can be tuned in real time, which makes it quick to iterate on the desired result. That allowed me to fine-tune the look I was going for and match it to some color images I found.

The resulting node network was then translated into shader code for use in HiRISE.

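```glsl
// Mars surface color (excerpt). The inputs tex, h, colorA, colorB,
// discardFactor and irradiance, the output frag_color and the helper
// functions derive, hsv2rgb and rgb2hsv are declared elsewhere in the shader.
void main() {
    // get the texture coordinate
    vec2 tc = TexCoord_FS_in;
    // get the value from the black-and-white texture
    float a = texture(tex, tc).r;
    // square root of the slope
    float d = sqrt(derive(tc, h));
    // hand-tuned values
    float b = d + 0.2 - a;
    // convert to RGB, then mix the two constant colors
    // based on the value of b at this point
    vec4 cola = hsv2rgb(vec4(colorA, 1.0));
    vec4 colb = hsv2rgb(vec4(colorB, 1.0));
    vec4 colorRamp = mix(cola, colb, b);
    // then convert back to HSV
    vec4 colorRampHSV = rgb2hsv(colorRamp);
    // multiply the value (V) channel by the texture value
    float e = colorRampHSV.z * a;
    // convert back to RGB
    vec4 col = hsv2rgb(vec4(colorRampHSV.xy, e, colorRampHSV.w));
    // fall back to transparent to avoid artifacts: where the texture, sampled
    // with coordinates scaled about the center by discardFactor, has no data
    // (0.0), output a transparent fragment on the far plane
    if (texture(tex, (((tc * 2.0) - 1.0) * discardFactor + 1.0) / 2.0).r == 0.0) {
        gl_FragDepth = 1.0;
        frag_color = vec4(0.0, 0.0, 0.0, 0.0);
    } else {
        gl_FragDepth = gl_FragCoord.z;
        frag_color = vec4(col.rgb * irradiance, 1.0);
    }
}
```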
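The helper `derive(tc, h)` is not shown. Judging by the comment, it returns the terrain slope at `tc`. One common way to compute such a slope is with central differences on the height grid. Here is a CPU-side sketch of that approach; the `HeightMap` struct and the `slope` function are hypothetical.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical height grid: row-major heights, spacing = ground distance between samples.
struct HeightMap {
    int width;
    int height;
    float spacing;
    std::vector<float> h;

    // Height at (x, y); coordinates outside the grid are clamped to the edge.
    float at(int x, int y) const {
        x = std::clamp(x, 0, width - 1);
        y = std::clamp(y, 0, height - 1);
        return h[static_cast<std::size_t>(y) * static_cast<std::size_t>(width) +
                 static_cast<std::size_t>(x)];
    }
};

// Magnitude of the height gradient (rise over run) from central differences.
float slope(const HeightMap& m, int x, int y) {
    const float dx = (m.at(x + 1, y) - m.at(x - 1, y)) / (2.0f * m.spacing);
    const float dy = (m.at(x, y + 1) - m.at(x, y - 1)) / (2.0f * m.spacing);
    return std::sqrt(dx * dx + dy * dy);
}
```

On a plane h = 3x + 4y with unit spacing, the slope is 5 everywhere in the interior.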

The UI for animating via keyframes and setting parameters was made using Dear ImGui.

Most UI elements were added one by one, whenever a single variable needed to be tuned in real time while the feature behind it was being developed. Because most elements are single-use, they can be enabled or disabled as needed.

The camera moves along splines defined by the points it passes through.

In the first test of the system, the tangents were not set correctly, which resulted in jittery motion.

The camera points are pairs of time and position, which are sorted by time and stored in a `std::vector<std::pair<float, std::vector<float>>>`.

The curve between the i-th and the (i+1)-th point is interpolated by passing the points i-1, i, i+1, and i+2, together with the global time, to `eval`.
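How the segment index i is found is not described. One straightforward way is a binary search over the sorted keyframe times with `std::upper_bound`, clamping the neighbor indices at both ends of the track. Here is a sketch of that approach; `Keyframe`, `findSegment` and `neighbours` are hypothetical names.

```cpp
#include <algorithm>
#include <array>
#include <cstddef>
#include <utility>
#include <vector>

using Keyframe = std::pair<float, std::vector<float>>; // (time, position components)

// Index i of the segment [keys[i].first, keys[i + 1].first] containing t.
// keys must be sorted by time and hold at least two entries; times outside
// the track are clamped to the first or last segment.
std::size_t findSegment(const std::vector<Keyframe>& keys, float t) {
    // first keyframe strictly later than t
    auto it = std::upper_bound(keys.begin(), keys.end(), t,
                               [](float value, const Keyframe& k) { return value < k.first; });
    const std::size_t idx = static_cast<std::size_t>(it - keys.begin());
    if (idx == 0) return 0;
    return std::min(idx - 1, keys.size() - 2);
}

// Indices of i-1, i, i+1 and i+2, clamped so they stay inside [0, n - 1].
std::array<std::size_t, 4> neighbours(std::size_t i, std::size_t n) {
    const std::size_t prev = (i == 0) ? 0 : i - 1;
    const std::size_t next2 = std::min(i + 2, n - 1);
    return {prev, i, i + 1, next2};
}
```

Clamping duplicates the first or last keyframe. With `eval` as listed below, a duplicated endpoint makes the product in its "between" test zero. The slope there is therefore 0, and the camera eases in and out at the ends of the track.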

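`eval` first computes, per coordinate, the slopes at the control points P1 and P2:

- If P1 lies strictly between P0 and P2, its slope is the secant slope (P2 - P0) / (t2 - t0).
- Likewise, if P2 lies between P1 and P3, its slope is (P3 - P1) / (t3 - t1).
- Otherwise the point is a local maximum or minimum, and its slope is set to zero, so the tangent runs parallel to the time axis.

The slopes are additionally clamped so that the tangents cannot overshoot the neighboring keyframe values. Finally, the segment is evaluated as a cubic Bézier curve whose inner control points lie one third of the segment duration away from P1 and P2 along these tangents.

This way, the maxima and minima among the keyframes remain the extrema of the curve, and the motion does not overshoot them.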
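The last statement of `eval` below is a set of nested `extrap` calls. `extrap` itself is not shown. Assuming it is plain linear interpolation, the nesting is de Casteljau's algorithm for a cubic Bézier curve. This sketch names the interpolation `interp` (a hypothetical stand-in) and compares the result against the Bernstein form.

```cpp
#include <cmath>

// Stand-in for extrap, assuming plain linear interpolation: a at t = 0, b at t = 1.
double interp(double t, double a, double b) { return a + t * (b - a); }

// The same nesting as at the end of eval: de Casteljau's algorithm for the
// cubic Bezier curve with end points p1, p2 and inner control points c1, c2.
double bezierDeCasteljau(double t, double p1, double c1, double c2, double p2) {
    return interp(t,
                  interp(t, interp(t, p1, c1), interp(t, c1, c2)),
                  interp(t, interp(t, c1, c2), interp(t, c2, p2)));
}

// The same curve in Bernstein form, for comparison.
double bezierBernstein(double t, double p1, double c1, double c2, double p2) {
    const double u = 1.0 - t;
    return u * u * u * p1 + 3.0 * u * u * t * c1 + 3.0 * u * t * t * c2 + t * t * t * p2;
}
```

The inner control points sit one third of the segment duration away from P1 and P2. As a result, the curve's derivative with respect to time at P1 is exactly `slope1`, and at P2 it is `slope2`. This is why `eval` divides the duration by 3.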

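```cpp
#include <cmath>    // std::fabs
#include <cstddef>  // std::size_t
#include <utility>  // std::pair

// Evaluates the segment between keyframes P1 and P2 at global time t.
// Each keyframe is a pair (time, values); T is e.g. std::vector<float>.
// extrap(t, a, b) is defined elsewhere in the engine; judging by its use here,
// it interpolates linearly from a (t = 0) to b (t = 1).
template <typename T>
T eval(double t, std::pair<float, T> P0, std::pair<float, T> P1,
       std::pair<float, T> P2, std::pair<float, T> P3)
{
    T out;
    // normalize t to [0, 1] within the segment
    t = (t - P1.first) / (P2.first - P1.first);
    for (std::size_t i = 0; i < P1.second.size(); i++) {
        double slope1 = 0; // stays 0 (flat tangent) if P1 is a local extremum
        if ((P0.second[i] - P1.second[i]) * (P1.second[i] - P2.second[i]) > 0) {
            // if p1 between p0 and p2
            slope1 = (P2.second[i] - P0.second[i]) / (P2.first - P0.first);
            // clamp so the tangent does not overshoot the neighboring values
            if (std::fabs(slope1 * (P0.first - P1.first) / 3.) >
                std::fabs(P1.second[i] - P0.second[i]))
                slope1 = 3 * (P1.second[i] - P0.second[i]) / (P1.first - P0.first);
            if (std::fabs(slope1 * (P2.first - P1.first) / 3.) >
                std::fabs(P1.second[i] - P2.second[i]))
                slope1 = 3 * (P1.second[i] - P2.second[i]) / (P1.first - P2.first);
        }
        double slope2 = 0; // same for P2, using P1 and P3
        if ((P1.second[i] - P2.second[i]) * (P2.second[i] - P3.second[i]) > 0) {
            // if p2 between p1 and p3
            slope2 = (P3.second[i] - P1.second[i]) / (P3.first - P1.first);
            if (std::fabs(slope2 * (P1.first - P2.first) / 3.) >
                std::fabs(P2.second[i] - P1.second[i]))
                slope2 = 3 * (P2.second[i] - P1.second[i]) / (P2.first - P1.first);
            if (std::fabs(slope2 * (P3.first - P2.first) / 3.) >
                std::fabs(P2.second[i] - P3.second[i]))
                slope2 = 3 * (P2.second[i] - P3.second[i]) / (P2.first - P3.first);
        }
        // inner Bezier control points, one third of the segment duration
        // away from P1 and P2 along the tangents
        double ct1 = (P2.first - P1.first) / 3;
        double cx1 = P1.second[i] + slope1 * ct1;
        double cx2 = P2.second[i] - slope2 * ct1;
        // de Casteljau evaluation of the cubic Bezier (P1, cx1, cx2, P2)
        out.push_back(extrap(t,
            extrap(t, extrap(t, P1.second[i], cx1), extrap(t, cx1, cx2)),
            extrap(t, extrap(t, cx1, cx2), extrap(t, cx2, P2.second[i]))));
    }
    return out;
}
```

The lighting outside the building is done using an HDRI, as in https://learnopengl.com/PBR/IBL/Specular-IBL. Modified versions of those shaders are used for the PBR objects.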

Importing skeletal animations is somewhat fragile and needs special care when exporting from Blender to avoid broken results.

The bones are imported recursively, starting from the hip, by concatenating their transformation matrices per frame. That way, if the arm moves, for example, the hand moves with it.
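The importer is not shown here. The following sketch only illustrates the recursive concatenation described above. For brevity it uses 2D homogeneous 3x3 matrices instead of the 4x4 matrices an OpenGL engine would use. The `Bone` layout and the function names are hypothetical.

```cpp
#include <array>
#include <cmath>
#include <cstddef>
#include <vector>

// Minimal 2D homogeneous transform, 3x3 row-major.
using Mat3 = std::array<double, 9>;

Mat3 mul(const Mat3& a, const Mat3& b) {
    Mat3 r{};
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j)
            for (int k = 0; k < 3; ++k)
                r[i * 3 + j] += a[i * 3 + k] * b[k * 3 + j];
    return r;
}

// Rotation by angle (radians), then translation by (tx, ty), relative to the parent.
Mat3 local(double angle, double tx, double ty) {
    const double c = std::cos(angle), s = std::sin(angle);
    return {c, -s, tx, s, c, ty, 0, 0, 1};
}

struct Bone {
    std::vector<std::size_t> children;
    Mat3 localPose; // pose for the current frame, relative to the parent bone
};

// Walks the hierarchy from the root (the hip) and writes every bone's global
// transform: global(child) = global(parent) * local(child).
void computeGlobal(const std::vector<Bone>& bones, std::size_t index,
                   const Mat3& parentGlobal, std::vector<Mat3>& out) {
    out[index] = mul(parentGlobal, bones[index].localPose);
    for (std::size_t child : bones[index].children)
        computeGlobal(bones, child, out[index], out);
}
```

Take a chain hip, upper arm, hand. Rotating only the upper arm's local pose by 90° moves the hand's global position as well, because the hand's transform is always concatenated with its parent's.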

Here is how the beginning of the animation looks in Blender.

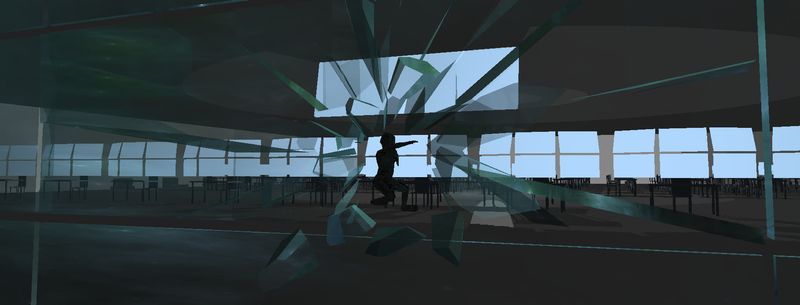

For the large mirror with real-time reflections, the scene is rendered twice: once normally, and once mirrored, restricted by a stencil in the shape of the mirror.

In the main file, this is done around line 960.
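The mirrored pass needs a matrix that reflects the scene across the mirror plane. The source does not show how this matrix is built. A common construction, sketched here with hypothetical names, works for a plane with unit normal n and offset d (so dot(n, x) = d on the plane). It maps each point x to x - 2(dot(n, x) - d)n, i.e. the matrix has the linear part I - 2nnᵀ and the translation 2dn.

```cpp
#include <array>

using Vec3 = std::array<double, 3>;
using Mat4 = std::array<double, 16>; // row-major

// Reflection across the plane {x : dot(n, x) = d}; n must be unit length.
Mat4 reflectionMatrix(const Vec3& n, double d) {
    Mat4 m{};
    for (int i = 0; i < 3; ++i) {
        for (int j = 0; j < 3; ++j)
            m[i * 4 + j] = (i == j ? 1.0 : 0.0) - 2.0 * n[i] * n[j];
        m[i * 4 + 3] = 2.0 * d * n[i];
    }
    m[15] = 1.0;
    return m;
}

// Applies m to the point p (implicit w = 1).
Vec3 transformPoint(const Mat4& m, const Vec3& p) {
    Vec3 r{};
    for (int i = 0; i < 3; ++i)
        r[i] = m[i * 4] * p[0] + m[i * 4 + 1] * p[1] + m[i * 4 + 2] * p[2] + m[i * 4 + 3];
    return r;
}
```

In the mirrored pass, such a matrix would typically be combined with the view matrix (view * M). Two practical details apply:

- The matrix is stored row-major, so it needs to be transposed when uploaded to OpenGL. One way is to pass `GL_TRUE` as the `transpose` argument of `glUniformMatrix4fv`.
- A reflection flips triangle winding, so back-face culling usually has to be inverted for that pass, e.g. with `glFrontFace(GL_CW)`.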

The small glass shards only reflect the environment map. This is done entirely in the shader code.
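In such a shader, the lookup direction is typically the view direction reflected about the surface normal, as computed by GLSL's built-in `reflect`. The C++ sketch below mirrors that formula. It assumes the environment is sampled straight from an equirectangular HDRI, and maps the direction to texture coordinates as the `SampleSphericalMap` function in the LearnOpenGL IBL chapters does. With a cube map, the reflected direction would be used as the lookup vector directly. The function names are hypothetical.

```cpp
#include <array>
#include <cmath>

using Vec3 = std::array<double, 3>;

double dot(const Vec3& a, const Vec3& b) { return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]; }

// Same formula as GLSL reflect(I, N): I - 2 * dot(N, I) * N, with N unit length.
// I is the incident direction, from the eye towards the surface.
Vec3 reflectDir(const Vec3& i, const Vec3& n) {
    const double k = 2.0 * dot(n, i);
    return {i[0] - k * n[0], i[1] - k * n[1], i[2] - k * n[2]};
}

// Maps a unit direction to equirectangular texture coordinates in [0, 1]^2:
// u from the azimuth atan2(z, x), v from the elevation asin(y).
std::array<double, 2> equirectUV(const Vec3& d) {
    const double pi = 3.14159265358979323846;
    return {std::atan2(d[2], d[0]) / (2.0 * pi) + 0.5, std::asin(d[1]) / pi + 0.5};
}
```

For example, a ray going down at 45° onto an upward-facing shard, `reflectDir({1, -1, 0}, {0, 1, 0})`, is reflected to `{1, 1, 0}`. After normalization, this direction would be passed to `equirectUV`.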

The Mars surface is created entirely from textures, so the actual model is only a relatively high-resolution rectangle.

This high resolution is needed because the NVIDIA GTX 1070 Ti used for rendering only supports a maximum tessellation level of 64. That is not enough to tessellate a plain rectangle finely enough for the Mars surface.
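Here is a quick way to estimate how fine the base rectangle has to be. Each patch can be split into at most 64 segments per edge; the limit can be queried with `glGetIntegerv(GL_MAX_TESS_GEN_LEVEL, ...)`. A terrain that should have N segments across therefore needs at least ceil(N / 64) patches per side. The function names below are hypothetical.

```cpp
#include <cstdint>

// Base patches per side so that tessellating each patch with at most maxLevel
// segments per edge still yields targetSegments segments across the terrain.
std::uint32_t patchesPerSide(std::uint32_t targetSegments, std::uint32_t maxLevel) {
    return (targetSegments + maxLevel - 1) / maxLevel; // ceiling division
}

// Tessellation level each patch then needs (never more than maxLevel).
std::uint32_t levelPerPatch(std::uint32_t targetSegments, std::uint32_t patches) {
    return (targetSegments + patches - 1) / patches;
}
```

For example, a hypothetical target of 5000 segments across needs `patchesPerSide(5000, 64) == 79` patches per side, each tessellated at level `levelPerPatch(5000, 79) == 64`. These numbers are illustrative, not the ones used in HiRISE.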

To save computation at runtime, the color texture is precomputed in a compute shader. Because calculating the colors is fairly expensive, this optimization increased performance by almost 10x.
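When a compute shader fills a texture with one invocation per texel, the number of work groups passed to `glDispatchCompute` is the texture size divided by the work-group size, rounded up. Here is a minimal sketch that assumes a hypothetical 16x16 local size, which has to match the `local_size_x`/`local_size_y` layout declaration in the shader.

```cpp
#include <array>
#include <cstdint>

// Work-group counts for glDispatchCompute when each invocation writes one texel.
// The default 16x16 local size is an assumption and must match the shader.
std::array<std::uint32_t, 3> dispatchSize(std::uint32_t width, std::uint32_t height,
                                          std::uint32_t localX = 16,
                                          std::uint32_t localY = 16) {
    return {(width + localX - 1) / localX, (height + localY - 1) / localY, 1};
}
```

For a 1000x500 texture this gives 63x32x1 groups, so the shader should skip invocations that fall outside the texture. Suppose the colors are written with `imageStore` and later sampled with `texture`. Then a `glMemoryBarrier(GL_TEXTURE_FETCH_BARRIER_BIT)` between the dispatch and the draw call makes the writes visible.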

I modelled the table, chair, screen, and USB stick in Blender by combining primitives and editing the resulting meshes. The text at the end was also created in Blender, by triangulating a text object.

Originally, the plan was to create a full simulation for the glass and have it shatter and interact with the scene.

However, this was not possible within the timeframe of the project, so I replaced it with Blender's Cell Fracture add-on and rigid body simulation.

Cell Fracture is used to initially shatter the glass, and each resulting piece is then simulated in the scene using Blender's rigid body simulation.

For a faster development cycle of shaders, I implemented a shader class with two functions:

- `reload` reloads the shader code.
- `checkReload` checks whether the file has changed since the last load or reload.

With these two functions, it is possible to check if the shader code needs reloading and, if it does, to reload it.

These steps need an additional delay. Writing a file to disk takes some time, and loading a partially written shader file results in crashes because the code is only partially updated.

The `reloadCheck` function is responsible for this and waits 20 ms before loading the shader.

This increases the frame time of that single frame. That is fine during development, as shaders are normally not reloaded every frame.
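The shader class itself is not shown. The sketch below uses a hypothetical `ShaderFileWatcher` class to show the idea behind `checkReload` and the 20 ms delay, based on `std::filesystem::last_write_time` (C++17). Compiling and linking the new source is left out.

```cpp
#include <chrono>
#include <filesystem>
#include <fstream>
#include <iterator>
#include <string>
#include <thread>
#include <utility>

namespace fs = std::filesystem;

// Hypothetical watcher for one shader file; it only tracks the modification time.
class ShaderFileWatcher {
public:
    explicit ShaderFileWatcher(fs::path path)
        : path_(std::move(path)), lastWrite_(fs::last_write_time(path_)) {}

    // Like checkReload: has the file changed since the last (re)load?
    bool changed() const { return fs::last_write_time(path_) != lastWrite_; }

    // If the file changed, waits `settle` so the editor can finish writing,
    // then records the new time stamp and returns true; the caller then reloads.
    bool poll(std::chrono::milliseconds settle = std::chrono::milliseconds(20)) {
        if (!changed()) return false;
        std::this_thread::sleep_for(settle);
        lastWrite_ = fs::last_write_time(path_);
        return true;
    }

    // Reads the whole source, e.g. for passing on to glShaderSource.
    std::string source() const {
        std::ifstream in(path_);
        return std::string(std::istreambuf_iterator<char>(in), std::istreambuf_iterator<char>());
    }

private:
    fs::path path_;
    fs::file_time_type lastWrite_;
};
```

Calling `poll()` once per frame keeps the common case cheap: when nothing changed, it costs only a `last_write_time` query. Only the frame in which a change is detected pays for the sleep.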

To mitigate the low pixel density of the HiRISE data, I experimented with adding different types of noise to the texture.
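The write-up does not describe which types of noise were tried. As one example, here is a minimal value-noise sketch: pseudo-random values on an integer lattice, interpolated with a smoothstep fade and summed over octaves. The hash constants are arbitrary choices for illustration, and all names are hypothetical. How the noise is combined with the texture (for example, added to or multiplied with the color) is left open.

```cpp
#include <cmath>
#include <cstdint>

// Integer lattice hash mapped to [0, 1); the constants are arbitrary mixing values.
float hash2(std::int32_t x, std::int32_t y) {
    std::uint32_t h = static_cast<std::uint32_t>(x) * 374761393u +
                      static_cast<std::uint32_t>(y) * 668265263u;
    h = (h ^ (h >> 13)) * 1274126177u;
    h ^= h >> 16;
    return static_cast<float>(h & 0xFFFFFFu) / 16777216.0f; // 24 bits -> [0, 1)
}

// Value noise: random values on the integer lattice, smoothly interpolated in between.
float valueNoise(float x, float y) {
    const float fx = std::floor(x), fy = std::floor(y);
    const auto ix = static_cast<std::int32_t>(fx), iy = static_cast<std::int32_t>(fy);
    const float tx = x - fx, ty = y - fy;
    // smoothstep fade avoids the sharp creases of plain bilinear interpolation
    const float sx = tx * tx * (3.0f - 2.0f * tx), sy = ty * ty * (3.0f - 2.0f * ty);
    const float a = hash2(ix, iy), b = hash2(ix + 1, iy);
    const float c = hash2(ix, iy + 1), d = hash2(ix + 1, iy + 1);
    const float top = a + sx * (b - a), bottom = c + sx * (d - c);
    return top + sy * (bottom - top);
}

// Sum of octaves at doubling frequency and halving amplitude, normalized to [0, 1].
float fbm(float x, float y, int octaves) {
    float sum = 0.0f, amp = 0.5f, norm = 0.0f;
    for (int o = 0; o < octaves; ++o) {
        sum += amp * valueNoise(x, y);
        norm += amp;
        x *= 2.0f;
        y *= 2.0f;
        amp *= 0.5f;
    }
    return sum / norm;
}
```

Because the noise is deterministic, the same texel always gets the same perturbation. The detail therefore stays fixed as the camera moves instead of flickering.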